This handbook provides a comprehensive overview of disinformation, including its different forms and the various techniques used to spread it. It also covers the basics of AI and synthetic media, including their potential applications and implications for democracy, within and beyond the electoral cycle.

What is synthetic disinformation and how does it threaten democracies?

In December 2023, the CPA published a Parliamentary Handbook on Disinformation, AI and Synthetic Media. The handbook provides a comprehensive overview of disinformation, including its different forms and the various techniques used to spread it. It introduces the concept of ‘synthetic disinformation’, a new era in the history of disinformation heralded by the emergence of artificial intelligence, and explores mitigation strategies for combating synthetic disinformation.

This extract from the handbook explains the key features of synthetic disinformation and outlines their consequences for democracies. Download the handbook below.

Introduction

The field of Artificial Intelligence (AI), a term coined by John McCarthy in 1956, has evolved significantly in the last decade, and is increasingly shaping the digital media environment. Generative AI models – which use machine learning to create new data that resembles the data they were trained on – have been made available to the public at little to no cost, leading to a proliferation of audio-visual and textual content shared online that has been created or altered using artificial intelligence.

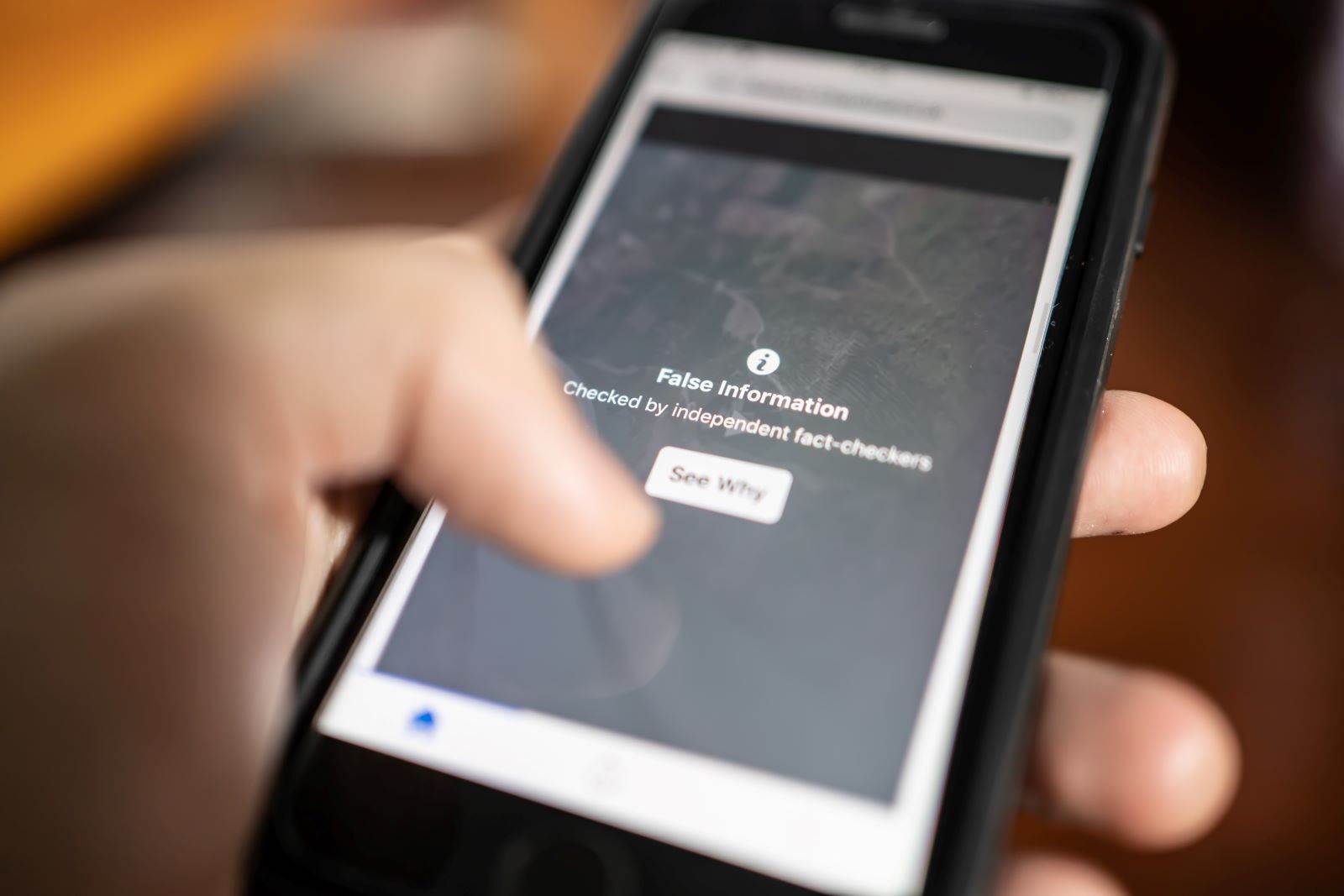

This AI-generated content, commonly known as ‘synthetic media’, has ushered in a new wave of complexities and challenges in combating online disinformation. A widely cited definition of disinformation is the intentional creation and/or dissemination of false or misleading online content. We build on this to define ‘synthetic disinformation’ as disinformation generated or enhanced via synthetic media techniques. Synthetic disinformation can, but does not invariably, take the form of deepfakes, which use deep learning to generate realistic videos, images, and audio of people doing or saying things they never did or said. Synthetic media, and their underlying generative AI technologies, have significantly lowered the barrier to entry for producing and disseminating disinformation, threatening to turbocharge its scale, reach, and potential impact.

What is new about the threat of disinformation in the age of synthetic media? While disinformation is centuries old and has already been transformed by decades of digital media, synthetic media accelerates the generation, distribution, and potential impact of disinformation. Notably, it deepens the erosion of citizens’ ability to make informed decisions about who to trust for information and guidance and what to believe.

Download the Parliamentary Handbook on Disinformation, AI and Synthetic Media

Features of synthetic disinformation

Accessibility and efficiency

The accessibility and efficiency of creating synthetic disinformation are unprecedented. In her 2020 book Deepfakes, Nina Schick writes that “Hollywood-level special effects will soon become accessible to everyone. This is an extraordinary development with unforeseen implications for our collective perception of reality.” With advancements in generative AI and the public release of text-to-image and text-to-video models, as well as models with multi-modal capabilities like OpenAI’s GPT-4, synthetic media is becoming democratised. The ability to create and purvey convincing synthetic media and disinformation at scale is available to the masses and no longer limited to developers.

The widespread accessibility and efficiency of these technologies amplify the potential for misuse, as they allow a wider range of actors, from state-sponsored entities to individuals, to participate in the creation and spread of synthetic disinformation at scale. The world is already experiencing the effects of this democratisation, with instances of synthetic disinformation that have gone viral in recent years, such as the synthetic image of Trump being arrested by New York City law enforcement (see below), created by individuals using tools like Midjourney.

Making pictures of Trump getting arrested while waiting for Trump's arrest. pic.twitter.com/4D2QQfUpLZ

— Eliot Higgins (@EliotHiggins) March 20, 2023

Hyper-realism

In her seminal 1977 work "On Photography," Susan Sontag writes that photographs are often misperceived as 'miniatures of reality' that provide 'incontrovertible proof that a given thing happened.' The advent of hyper-realistic synthetic media, particularly contextually believable deepfakes, underscores the necessity for public scepticism and a critical reassessment of the adage 'seeing is believing.' Such synthetic media, capable of creating highly lifelike depictions of events or actions that never actually took place, intensifies the challenges Sontag identified with traditional photography.

This evolution in media technology, marked by its ability to distort reality convincingly, presents a pressing issue for modern information consumption and necessitates a heightened awareness and understanding among Parliamentarians and the public alike. Reputational damage is a significant consequence of this hyper-realism. For example, a deepfake audio recording circulated in the lead-up to Slovakia’s 2023 general election depicted Progressive Slovakia party leader Michal Šimečka discussing buying votes from the Roma minority and cracking a joke about child pornography. Deepfakes can cause serious reputational damage during elections and in public life, which can have downstream consequences such as distorting citizens’ views of candidates and their voting decisions.

Scalability

With advancements in artificial intelligence and machine learning, the production of synthetic media can be automated, enabling the creation of large volumes of disinformation at an unprecedented scale. Large language models, for example, can generate credible-sounding disinformation in seconds, removing the need for humans to write each post manually.

In April 2023, for instance, NewsGuard identified 49 websites across seven languages that were using AI to generate large volumes of clickbait articles for advertising revenue. These sites, usually lacking clear ownership disclosure, appear to be designed for revenue generation through programmatic ads, similar to earlier human-operated content farms, but with the added capability and scalability enabled by AI technology.

This scalability has deleterious consequences for democracies. Notably, it not only potentially increases the volume of disinformation on social media but also allows new entrants into the disinformation game, overwhelming governments, platforms, and fact-checkers. The scalability is further enhanced by the fact that once a synthetic media model is trained, it can generate new content with minimal incremental cost. This means that a single piece of synthetic media, such as a deepfake video, can be the template for generating countless variations, each personalised to target different groups or individuals.

Personalisation and hyper-targeting

Synthetic media's ability to be hyper-targeted and personalised is of particular concern in the context of disinformation and its impact on democracy. Existing research indicates that disinformation often 'preaches to the choir,' reinforcing rather than changing individual attitudes. This phenomenon may be explained by confirmation bias or motivated reasoning, where individuals tend to favour information that confirms their pre-existing views. When synthetic media is personalised, it exploits this bias, making disinformation more likely to find a receptive audience among those already inclined to believe it. People are more likely to engage with and share content that resonates with their existing opinions, threatening to deepen ideological divides and fortify extreme views. This poses a significant risk of catalysing anti-democratic actions, as seen in events like the January 6th Capitol attack in 2021 and the Canadian truckers' convoy protest in 2022. In this context, synthetic media not only threatens to degrade the quality of democratic debate but also potentially hardens false beliefs, with serious downstream effects.

Liar’s dividend

Synthetic media also introduces the ‘liar’s dividend’, a term coined by Danielle Citron and Bobby Chesney for the strategy whereby malicious actors weaponise citizens’ scepticism around deepfakes and synthetic media to discredit genuine evidence, thereby protecting their own credibility. As Chesney and Citron write, “Put simply: a sceptical public will be primed to doubt the authenticity of real audio and video evidence.” The liar’s dividend has already surfaced in the courtroom. For instance, two defendants on trial for the January 6 attack on the U.S. Capitol attempted to argue that a video showing them at the Capitol was unreliable on the basis that it could have been AI-generated. The combination of deepfakes and the ‘liar’s dividend’ can inject uncertainty and doubt into public opinion, muddying citizens’ ability to make informed decisions about what to believe.

The 'liar's dividend' can be used in various contexts, including democracies. For instance, if Trump said that the notorious 'Access Hollywood tape' was a deepfake, it is plausible that a significant percentage of the population would believe it in the age of synthetic media. Politicians and public figures can manipulate information to protect their interests and rehabilitate their image, creating an uncertain environment that makes truth discernment difficult for citizens and may engender downstream effects such as eroding trust in institutions and the media. The mere knowledge that such sophisticated distortion and visual rewriting of reality is possible may erode trust in all media, permitting actual disinformation to hide behind the doubt cast on legitimate information. In this environment, discerning truth becomes increasingly challenging, as synthetic media is weaponised to distort the information environment and undermine reality at scale.

Watch: A TED Talk by Danielle Citron, co-creator of the term 'liar's dividend'

Conclusion

Ultimately, the features of synthetic disinformation are nuanced and multi-pronged. On one hand, believing fake content can undermine the epistemic quality of democratic discourse and citizens’ decision-making, damaging reputations and disrupting democratic processes. On the other, dismissing authentic information as synthetic helps wrongdoers evade accountability, fostering cynicism, undermining trust and eroding a collective sense of reality. As computer science professor Hany Farid put it in a New York Times article, “The specter of deepfakes is much, much more significant now: it doesn’t take tens of thousands, it just takes a few, and then you poison the well and everything becomes suspect.”